Abstract

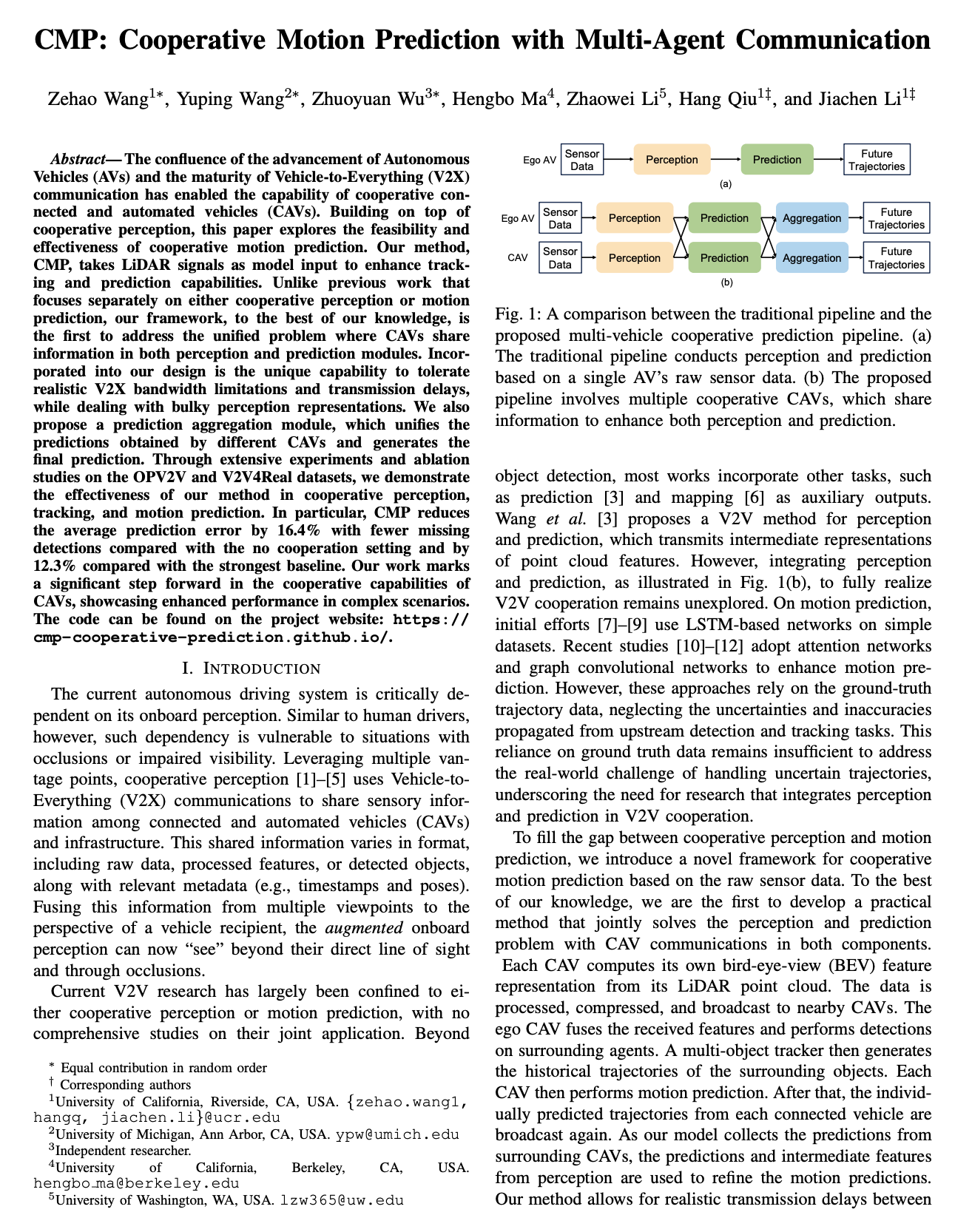

Advances in Autonomous Vehicles (AVs), together with mature Vehicle-to-Everything (V2X) communication, have made cooperative connected and automated vehicles (CAVs) possible. Building on cooperative perception, this paper explores the feasibility and effectiveness of cooperative motion prediction. Our method, CMP, takes LiDAR signals as model input to enhance tracking and prediction. Previous work addresses either cooperative perception or motion prediction on its own. To the best of our knowledge, our framework is the first to address the unified problem, in which CAVs share information in both the perception and prediction modules. Our design tolerates realistic V2X bandwidth limitations and transmission delays while handling bulky perception representations. We also propose a prediction aggregation module, which unifies the predictions obtained by different CAVs and generates the final prediction. Through extensive experiments and ablation studies on the OPV2V and V2V4Real datasets, we demonstrate the effectiveness of our method in cooperative perception, tracking, and motion prediction. In particular, CMP reduces the average prediction error by 16.4% with fewer missing detections compared with the no-cooperation setting, and by 12.3% compared with the strongest baseline. Our work marks a significant step forward in the cooperative capabilities of CAVs, showing improved performance in complex scenarios.
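The abstract says CMP tolerates transmission delays but does not describe how. The following is a minimal sketch of one generic way to use a delayed message from another CAV. It does not reproduce CMP's mechanism, which operates on bulky perception representations rather than individual detections. The sketch assumes that a sender broadcasts an object's position and velocity in its own local frame, along with its own pose at capture time, and that the delay is known. The receiver compensates for the delay with constant-velocity extrapolation and then maps the point into its own (ego) frame. `Pose2D`, `SharedDetection`, and `compensate_and_align` are hypothetical names used only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """Hypothetical 2D vehicle pose in a shared world frame."""
    x: float
    y: float
    yaw: float  # heading in radians


@dataclass
class SharedDetection:
    """Hypothetical message: object state in the sender's local frame."""
    x: float   # metres
    y: float
    vx: float  # metres per second
    vy: float


def compensate_and_align(det: SharedDetection, sender_pose: Pose2D,
                         ego_pose: Pose2D, delay_s: float) -> tuple:
    """Return the object's (x, y) in the ego frame after delay compensation.

    Assumption: constant-velocity motion over the delay, and sender_pose is
    the sender's pose at the moment the detection was captured.
    """
    # 1) Extrapolate the object forward by the transmission delay
    #    (still expressed in the sender's frame at capture time).
    x = det.x + det.vx * delay_s
    y = det.y + det.vy * delay_s

    # 2) Sender-local frame -> world frame (rotate, then translate).
    c, s = math.cos(sender_pose.yaw), math.sin(sender_pose.yaw)
    wx = sender_pose.x + c * x - s * y
    wy = sender_pose.y + s * x + c * y

    # 3) World frame -> ego-local frame (translate, then inverse rotation).
    dx, dy = wx - ego_pose.x, wy - ego_pose.y
    c, s = math.cos(ego_pose.yaw), math.sin(ego_pose.yaw)
    return (c * dx + s * dy, -s * dx + c * dy)


if __name__ == "__main__":
    det = SharedDetection(x=2.0, y=0.0, vx=1.0, vy=0.0)
    sender = Pose2D(10.0, 0.0, math.pi / 2)  # sender faces +y in the world
    ego = Pose2D(0.0, 0.0, 0.0)
    print(compensate_and_align(det, sender, ego, delay_s=0.1))  # about (10.0, 2.1)
```

Constant-velocity extrapolation is only the simplest choice. A learned model could replace step 1 without changing the frame transforms in steps 2 and 3.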

Key Ideas and Contributions

1) Practical, Latency-robust Framework for Cooperative Motion Prediction: our framework integrates cooperative perception with trajectory prediction, a pioneering effort for connected and automated vehicles. It enables CAVs to share and fuse data derived from LiDAR point clouds to improve object detection, tracking, and motion prediction.

2) Attention-based Prediction Aggregation: the prediction aggregator uses the predictions shared by other CAVs to improve prediction accuracy. The mechanism is scalable and handles a varying number of CAVs.

3) State-of-the-art Performance in Cooperative Prediction under Practical Settings on the OPV2V and V2V4Real Datasets: we evaluate our framework on both simulated (OPV2V) and real-world (V2V4Real) V2V scenarios. It outperforms the cooperative perception and prediction network of V2VNet, the strongest baseline.
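The following minimal sketch illustrates the general idea behind the attention-based prediction aggregation in item 2. It assumes that each CAV contributes one predicted trajectory for the same target agent together with a feature vector that acts as an attention key, and that the ego vehicle holds a query vector. Scaled dot-product scores are softmax-normalized into weights, and the fused trajectory is the weighted average of the shared trajectories. The names `aggregate_predictions` and `softmax` and the fixed query/key vectors are hypothetical. CMP's learned projections and network architecture are not reproduced here.

```python
import math
from typing import List, Sequence, Tuple

Waypoint = Tuple[float, float]  # (x, y) in the ego frame
Trajectory = List[Waypoint]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate_predictions(query: Sequence[float],
                          keys: Sequence[Sequence[float]],
                          trajectories: Sequence[Trajectory]):
    """Fuse one agent's trajectories from N CAVs with attention weights.

    Assumption: all trajectories are already in the ego frame and share the
    same prediction horizon. Returns (fused_trajectory, weights).
    """
    if not keys or len(keys) != len(trajectories):
        raise ValueError("need one key per shared trajectory")
    horizon = len(trajectories[0])
    if any(len(t) != horizon for t in trajectories):
        raise ValueError("all trajectories must have the same horizon")

    # Scaled dot-product attention scores: q . k / sqrt(d).
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
              for key in keys]
    weights = softmax(scores)  # normalized over however many CAVs sent data

    # Weighted average of waypoints at each future time step.
    fused: Trajectory = []
    for t in range(horizon):
        fx = sum(w * traj[t][0] for w, traj in zip(weights, trajectories))
        fy = sum(w * traj[t][1] for w, traj in zip(weights, trajectories))
        fused.append((fx, fy))
    return fused, weights


if __name__ == "__main__":
    fused, w = aggregate_predictions(
        query=[1.0, 0.0],
        keys=[[1.0, 0.0], [1.0, 0.0]],  # equally relevant CAVs
        trajectories=[[(0.0, 0.0), (2.0, 2.0)], [(2.0, 0.0), (4.0, 2.0)]],
    )
    print(w)      # equal weights of 0.5 each
    print(fused)  # the waypoint-wise mean of the two trajectories
```

The softmax normalizes over whatever number of keys arrives, so the same function works with one CAV or many. This property corresponds to the scalability claim in item 2.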

Quantitative Results of Cooperative Prediction with Ablation